Introduction:

Rapid advances in artificial intelligence (AI) have brought transformative changes across many sectors, with AI-driven models like OpenAI’s ChatGPT leading the charge. Alongside these technological strides, however, concerns about data accuracy and privacy have come to the forefront. Recently, ChatGPT has come under scrutiny from the European Union (EU) privacy watchdog regarding its data accuracy and its compliance with stringent data protection regulations. This post explores the nuances of this scrutiny, its implications for AI development, and the broader impact on data privacy and user trust.

Understanding ChatGPT and its Data Handling:

What is ChatGPT?

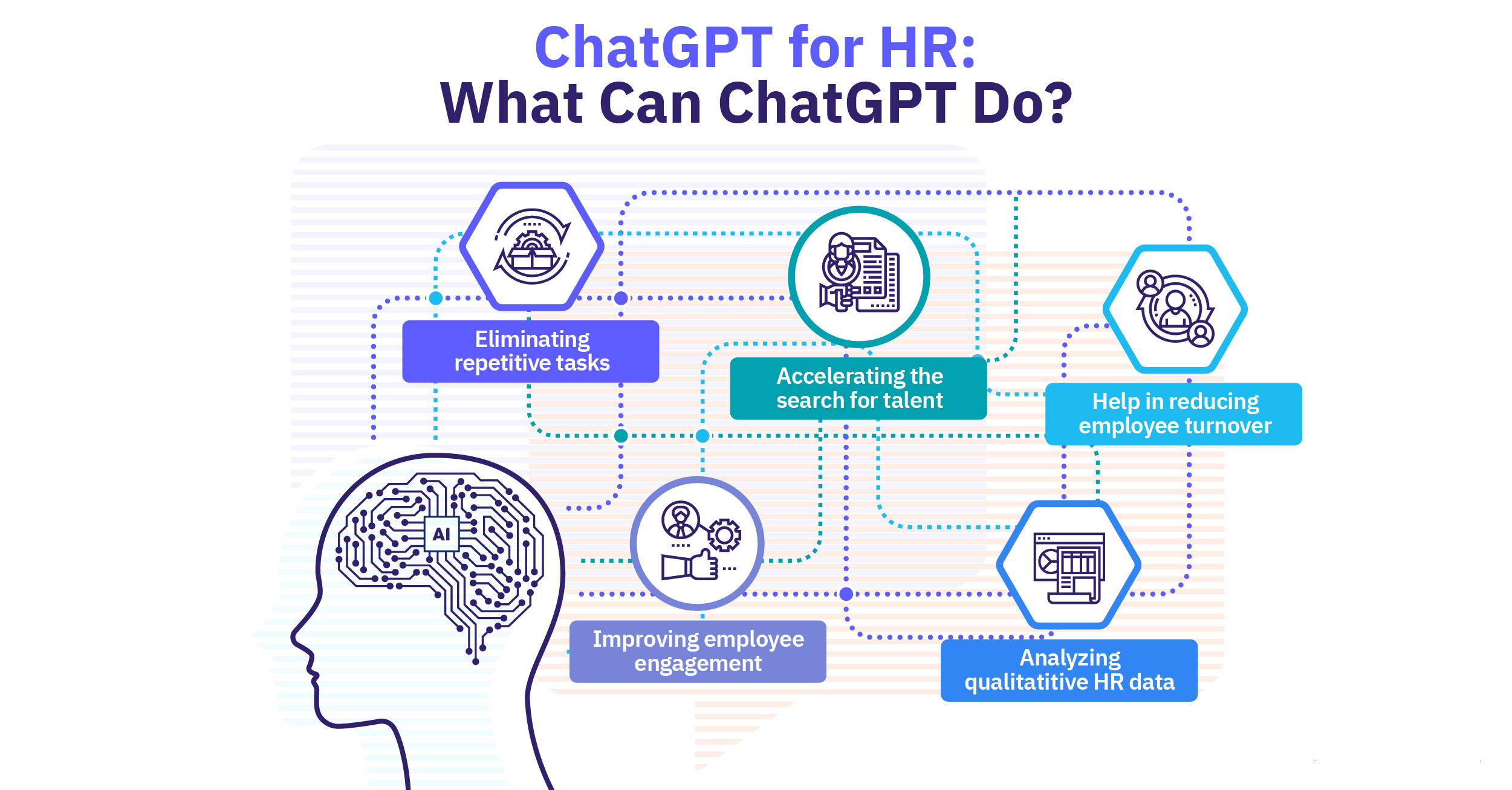

ChatGPT is an AI language model developed by OpenAI. It leverages a massive dataset derived from diverse internet sources to produce coherent and contextually relevant responses. This capability makes ChatGPT useful in various applications, including customer service, content creation, and conversational agents.

How does ChatGPT handle data?

ChatGPT’s functionality relies on processing vast amounts of textual data. During its training phase, it learns from a large corpus of text, identifying patterns, structures, and contextual cues. Once deployed, the model processes real-time inputs from users to generate appropriate responses. Importantly, OpenAI has implemented measures to handle data responsibly, including anonymizing user data and incorporating safety mitigations to prevent the generation of harmful content.

The EU’s Privacy Concerns:

Data accuracy and reliability:

One of the primary concerns raised by the EU privacy watchdog is the accuracy of the data processed by ChatGPT. Given that the model is trained on internet data, which can be inherently unreliable and biased, the accuracy of its outputs can vary. Incorrect or misleading information generated by ChatGPT could have serious implications, especially if used in sensitive areas like healthcare, legal advice, or financial services.

Compliance with GDPR:

The General Data Protection Regulation (GDPR) is the EU’s cornerstone regulation for data protection and privacy. GDPR emphasizes transparency, data accuracy, and user consent. The EU privacy watchdog is concerned about whether ChatGPT’s data handling practices align with these regulations, particularly in terms of ensuring data accuracy and enabling users to correct inaccuracies.

Transparency and Accountability:

Transparency in AI operations is crucial for building trust and accountability. The EU watchdog questions whether users are adequately informed about how their data is used and whether they have control over it. Additionally, there is a need for mechanisms to hold AI systems accountable for their outputs, especially when inaccuracies occur.

Implications for AI Development:

Enhancing data accuracy:

Improving data accuracy in AI models like ChatGPT involves several strategies. These include refining training datasets to minimize biases, implementing more robust data validation processes, and continually updating the model with accurate and reliable data sources. Enhanced data accuracy not only improves the model’s performance but also aligns with regulatory requirements.
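As an illustration of what a data validation step might look like, here is a minimal Python sketch. It is not OpenAI's pipeline: the `Record` type, the `TRUSTED_SOURCES` allowlist, and the `min_length` cutoff are all hypothetical names and values chosen for this example. It simply drops records from unlisted sources, very short records, and exact duplicates.

```python
from dataclasses import dataclass


@dataclass
class Record:
    text: str
    source: str


# Hypothetical allowlist of source labels treated as reliable (an assumption
# made for this example, not something described in the article).
TRUSTED_SOURCES = {"encyclopedia", "peer_reviewed"}


def validate(records, min_length=20):
    """Keep records that are from a trusted source, long enough, and not duplicates."""
    seen = set()
    kept = []
    for record in records:
        # Normalize case and whitespace so trivial variants count as duplicates.
        normalized = " ".join(record.text.lower().split())
        if record.source not in TRUSTED_SOURCES:
            continue
        if len(normalized) < min_length:
            continue
        if normalized in seen:
            continue
        seen.add(normalized)
        kept.append(record)
    return kept
```

A real validation process would likely go further, for example by fact-checking against reference data or measuring bias, but the filter-and-deduplicate shape is a common starting point.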

Strengthening user controls:

To comply with GDPR, it is essential to provide users with greater control over their data. This can be achieved by implementing features that allow users to review and correct the information generated by the AI. Moreover, transparent data usage policies and clear communication about data handling practices can help build user trust.

Ethical AI Practices:

Developing ethical AI systems is paramount. This involves not only complying with regulations but also adhering to principles of fairness, accountability, and transparency. Establishing independent ethics boards, conducting regular audits, and engaging with stakeholders, including users and regulatory bodies, can help ensure that AI systems are developed and deployed responsibly.

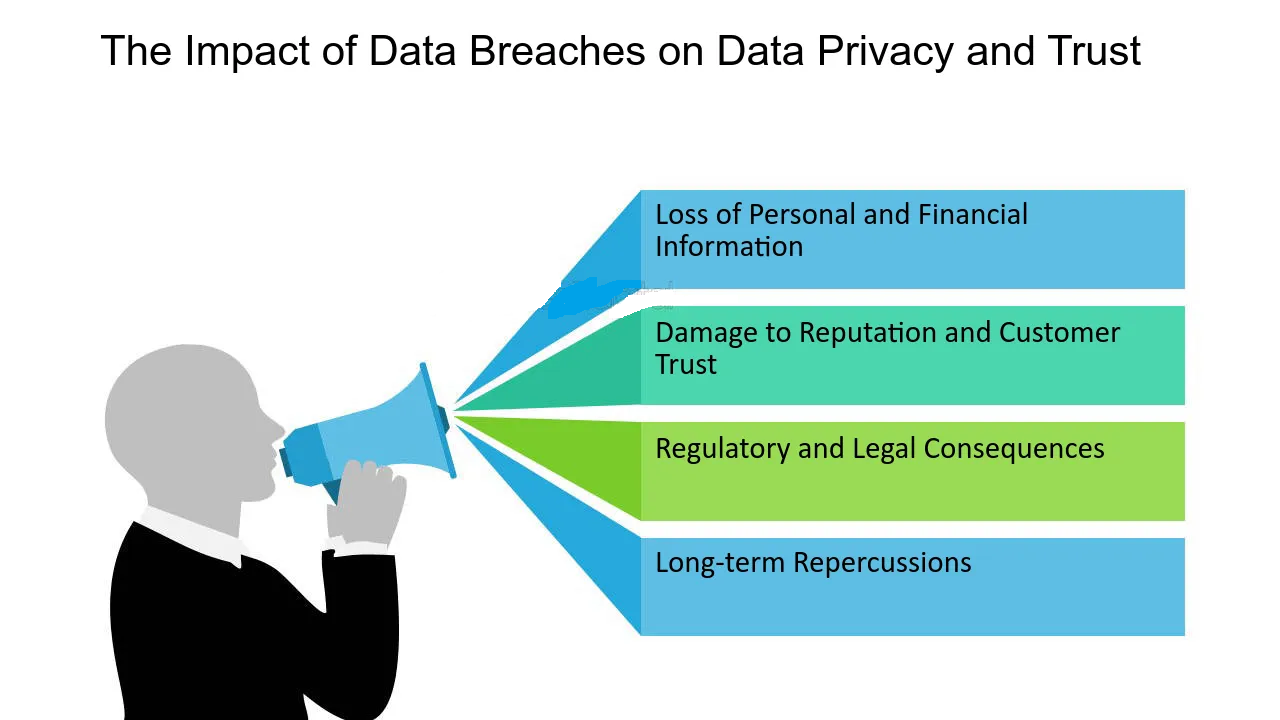

Broader Impact on Data Privacy and User Trust:

The Role of Regulatory Bodies:

Regulatory bodies like the EU privacy watchdog play a critical role in overseeing the development and deployment of AI technologies. Their scrutiny ensures that AI systems adhere to established data protection standards, protect user privacy, and promote ethical practices. This oversight is vital for preventing the misuse of AI and ensuring that technological advancements do not come at the expense of fundamental rights.

Building user trust:

User trust is the cornerstone of successful AI adoption. Transparent practices, robust data protection measures, and responsiveness to user concerns are essential for building and maintaining this trust. When users are confident that their data is handled responsibly and that they have control over their information, they are more likely to engage with AI technologies.

Balancing Innovation and Regulation:

While regulation is necessary to ensure ethical practices and protect user rights, it is also important to strike a balance that does not stifle innovation. Collaborative efforts between regulators, AI developers, and other stakeholders can help create a regulatory environment that fosters innovation while safeguarding privacy and data accuracy.

Moving Forward: Strategies for Compliance and Innovation:

Collaborative Development:

Engaging in collaborative development efforts with regulatory bodies, industry experts, and user groups can help ensure that AI systems are both innovative and compliant with data protection standards. This collaboration can lead to the development of best practices and frameworks that guide responsible AI development.

Continuous monitoring and improvement:

AI systems should be subject to continuous monitoring and improvement. Regular audits, user feedback, and real-time performance evaluations can help identify areas for enhancement and ensure that the system remains accurate and compliant. Continuous improvement is key to adapting to evolving regulations and user expectations.
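One way to picture continuous monitoring is a rolling accuracy check driven by user feedback. The Python sketch below is only an assumption about how such a check could work: the `AccuracyMonitor` class, its window size, and its threshold are hypothetical and do not describe any system mentioned in this post.

```python
from collections import deque


class AccuracyMonitor:
    """Track the share of responses marked accurate over a rolling window."""

    def __init__(self, window=100, threshold=0.9):
        # Only the most recent `window` feedback items are kept.
        self.results = deque(maxlen=window)
        self.threshold = threshold

    def record(self, was_accurate):
        """Store one piece of feedback: True if the response was accurate."""
        self.results.append(bool(was_accurate))

    def accuracy(self):
        """Return the rolling accuracy, or None if no feedback has arrived yet."""
        if not self.results:
            return None
        return sum(self.results) / len(self.results)

    def needs_review(self):
        """Flag the system for an audit when accuracy drops below the threshold."""
        current = self.accuracy()
        return current is not None and current < self.threshold
```

In practice, each piece of feedback from a user or an auditor would be passed to `record`, and a `needs_review` result of `True` would trigger a closer audit. The window and threshold would need to be tuned to the application and to any applicable regulatory expectations.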

Investing in AI Ethics:

Investing in AI ethics involves not only complying with regulations but also proactively addressing potential ethical concerns. This includes developing AI models that are transparent, accountable, and designed with user privacy in mind. Ethical AI development can differentiate companies in the marketplace and build long-term user trust.

Conclusion:

The EU privacy watchdog’s scrutiny of ChatGPT underscores the critical importance of data accuracy and compliance with data protection regulations in AI development. As AI technologies continue to evolve, ensuring that they align with the principles of transparency, accountability, and user privacy is paramount. By adopting robust data handling practices, enhancing user controls, and engaging in ethical AI development, companies can navigate regulatory challenges and build trust with their users. The ongoing dialogue between regulators, developers, and users will be crucial in shaping a future for AI that is both innovative and responsible.